It's #qcon London!!

We're here with a booth and talks on #ZeroTrust and #apacheflink.

Also meeting old friends and colleagues!

#ApacheFlink

https://www.europesays.com/1924771/ New Confluent Cloud for Apache Flink® Capabilities Simplify Real-Time AI Development #AI #ApacheFlink #ArtificialIntelligence #Confluent #DataScience #DataStreamingPlatform #Inc. #ML

Behind Netflix's Impressions: The Data-Driven Engine Powering Personalization

Netflix's new blog series dives deep into the technology behind its impression tracking system, revealing how the streaming giant processes billions of data points daily to enhance user experience. Wi...

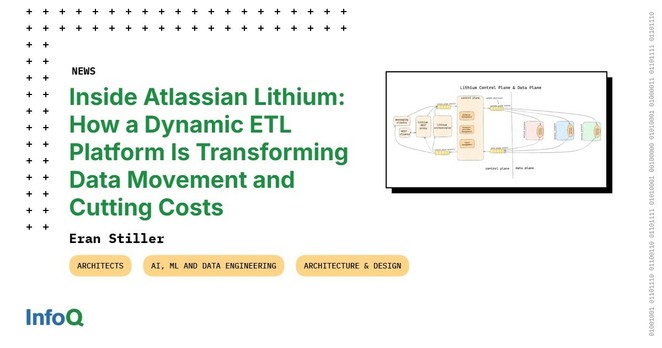

Atlassian introduced Lithium - an in-house #ETL platform designed to meet the requirements of dynamic data movement.

Lithium simplifies cloud migrations, scheduled backups, and in-flight data validations with ephemeral pipelines and tenant-level isolation - ensuring efficiency, scalability & cost savings.

InfoQ spoke with Niraj Mishra, Principal Engineer at Atlassian, about Lithium’s implementation and future.

Read more here: https://bit.ly/415RPYZ

#DataPipelines #KafkaStreams #ApacheKafka #ApacheFlink #SoftwareArchitecture

Spent this morning digging into an #ApacheFlink job in production. I just love this technology, both in development and operation. Give it a try!

An early Christmas present for y'all: a new blog in which I explore Flink CDC. Building data pipelines declaratively is pretty nice, but is it ready for prime-time? Let's find out!

This role will let me build on my recent work, dive into new tech, and support the next wave of #openSource innovation. While I may be shifting gears, I’m looking forward to continued collaboration with the wonderful #apacheKafka and #apacheFlink communities.

The October issue of #CheckpointChronicle is now out

It covers Ververica's Fluss, #ApacheFlink 2.0, Iggy.rs, Strimzi's support for #ApacheKafka 4.0, tons of OTF material from @vanlightly, Christian Hollinger's write-up of ngrok's data platform, nice detail on how SmartNews use #ApacheIceberg with Flink and #ApacheSpark, a good write-up from Sudhendu Pandey on #ApachePolaris, notes from Kir Titievsky on Kafka's Avro serialisers, and much more!

It's lunch time at the datastream summit!

Leave the data, take the cannoli

#datastreaming #apacheflink #apachepulsar

I'm at the streaming data summit and Taylor Swift is the topic of the conversation.

What is Taylor Swift to us?

A traffic spike!

#apachepulsar #apacheflink

It's the formal opening of @FlinkFoward!

If you're here, the opening talks are starting!! #apacheflink #datastreaming #realtime!

I'm a sucker for a nice plushy. And these two are from #current24 and represent my favourite Raft-powered distributed systems, #TiDB and #Redpanda!

Both of which work great with @ververicadata and #apacheflink

Part two of the journey to #current24, getting off the plane...

I'll see most of you all tomorrow, though some will be at the meetup this evening.

Ping me to chat nonsense about #apacheflink, #apachePaimon and #apachekafka

Wednesday September 18, 2024 at 9am PDT | 12noon EDT | 6pm CEST

Come learn **#ApacheKafka** with @celeste! In this 2-hour workshop you’ll learn the basic components of Kafka and how to get started with data streaming using Python. We'll also give a brief introduction to transforming your data using **#ApacheFlink**.

Read more and register https://aiven.io/workshop/movie-recommender-with-tensorflow-pgvector?utm_source=mastodon&utm_medium=organic&utm_campaign=workshop-movie-recommender

Latest version of my Whisky clustering using Apache projects talk:

https://speakerdeck.com/paulk/groovy-whiskey

Tickets are still available for CoCEU. #apachecon #communityovercode #apachewayang #ApacheFlink #ApacheSpark #ApacheBeam #ApacheIgnite #ApacheCommons @ApacheGroovy #opensource #machinelearning #groovylang

Some notes on implementing asynchronous Python callbacks in #apacheFlink https://nowave.it/async-io-with-pyflink.html

#CaseStudy - Discover how #Yelp reworked its data streaming architecture with #ApacheBeam & #ApacheFlink!

The company replaced a fragmented set of data pipelines for streaming transactional data into its analytical systems, such as Amazon Redshift and an in-house data lake, using Apache data streaming projects to create a unified and flexible solution.

Dive into the details: https://bit.ly/3WgkTL7

Do you know SQL? Exactly!

Most databases and data processing tools support SQL for exactly that reason. And we see a strong movement for all of them to get closer to the standard, day by day.

In this week's episode of the Cloud Commute podcast, our host @noctarius2k talks with @gunnarmorling from #Decodable about the benefits of #SQL, how #CDC (change data capture) works and why Decodable uses #ApacheFlink as the underlying technology for its #StreamProcessing offering.

Just another day on the #ApacheFlink user mailing list

For better or worse, understanding JARs is an unavoidable first step to using Flink if you're running it yourself.

That's why I wrote about it a lot:

https://www.decodable.co/blog/flink-sql-and-the-joy-of-jars

https://www.decodable.co/blog/flink-sql-misconfiguration-misunderstanding-and-mishaps

Blogged: Flink SQL—Misconfiguration, Misunderstanding, and Mishaps

Pull up a comfy chair, grab a mug of tea, and settle in to read about my adventures troubleshooting some gnarly #ApacheFlink problems ranging from the simple to the ridiculous…

https://dcbl.link/troubleshooting-flinksql2

Topics include:

What's Running Where? (Fun with Java Versions)

What's Running Where? (Fun with JAR dependencies)

What's Running Where? (Not So Much Fun with Hive MetaStore)