Chuck Darwin<p><a href="https://c.im/tags/BigCode" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>BigCode</span></a> is an open scientific collaboration working on responsible training of large language models for coding applications. </p><p>In this organization you can find the artefacts of this collaboration:<br>👉 <a href="https://c.im/tags/StarCoder" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>StarCoder</span></a>, a state-of-the-art language model for code, <br>👉 The <a href="https://c.im/tags/Stack" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>Stack</span></a>, the largest available pretraining dataset with permissively licensed code, and <br>👉 <a href="https://c.im/tags/SantaCoder" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>SantaCoder</span></a>, a 1.1B parameter model for code.</p><p><a href="https://c.im/tags/StarCoder" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>StarCoder</span></a> is a 15.5B-parameter language model for code trained on 1T tokens across 80+ programming languages. <br>It uses multi-query attention (MQA) for efficient generation, has an 8,192-token context window, and can do fill-in-the-middle.</p><p>Chat with StarCoder here: <a href="https://huggingface.co/chat/?model=bigcode/starcoder" rel="nofollow noopener noreferrer" target="_blank"><span class="invisible">https://</span><span class="ellipsis">huggingface.co/chat/?model=big</span><span class="invisible">code/starcoder</span></a></p><p><a href="https://huggingface.co/bigcode" rel="nofollow noopener noreferrer" target="_blank"><span class="invisible">https://</span><span class="">huggingface.co/bigcode</span><span class="invisible"></span></a></p>

Recent searches

No recent searches

Search options

Only available when logged in.

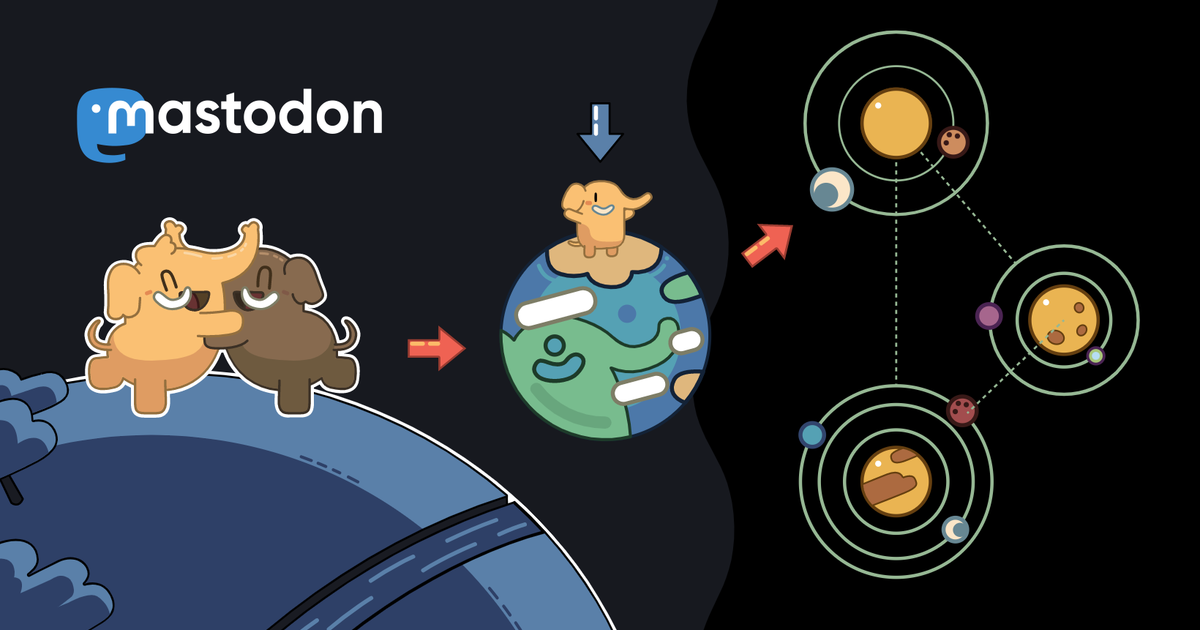

mstdn.social is one of the many independent Mastodon servers you can use to participate in the fediverse.

A general-purpose Mastodon server with a 500 character limit. All languages are welcome.

Administered by:

Server stats:

13Kactive users

mstdn.social: About · Status · Profiles directory · Privacy policy

Mastodon: About · Get the app · Keyboard shortcuts · View source code · v4.3.8

#santacoder

Alex Chaffee<p>There are quite a few code-generating “AI” systems now: GitHub <a href="https://ruby.social/tags/CoPilot" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>CoPilot</span></a>, Amazon <a href="https://ruby.social/tags/CodeWhisperer" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>CodeWhisperer</span></a>, BigCode <a href="https://ruby.social/tags/SantaCoder" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>SantaCoder</span></a>, Facebook <a href="https://ruby.social/tags/Incoder" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>Incoder</span></a>, maybe even more.</p><p>I wonder how hard it would be to get these BS bots to play <a href="https://ruby.social/tags/TDD" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>TDD</span></a> ping-pong… I write a test, then they generate code until all tests pass, then I refactor, then we repeat.</p>

Vincent HETRU<p><a href="https://sigmoid.social/tags/HuggingFace" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>HuggingFace</span></a> just released the <a href="https://sigmoid.social/tags/SantaCoder" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>SantaCoder</span></a> models for the holiday season. Part of the <a href="https://sigmoid.social/tags/BigCode" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>BigCode</span></a> project, these 1.1B parameter models are trained on <a href="https://sigmoid.social/tags/Python" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>Python</span></a>, <a href="https://sigmoid.social/tags/Java" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>Java</span></a>, and <a href="https://sigmoid.social/tags/JavaScript" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>JavaScript</span></a>, with training data filtered using techniques like near-deduplication and comment-to-code ratio. </p><p><a href="https://huggingface.co/bigcode/santacoder" rel="nofollow noopener noreferrer" target="_blank"><span class="invisible">https://</span><span class="ellipsis">huggingface.co/bigcode/santaco</span><span class="invisible">der</span></a></p><p><a href="https://sigmoid.social/tags/AI" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>AI</span></a> <a href="https://sigmoid.social/tags/DeepLearning" class="mention hashtag" rel="nofollow noopener noreferrer" target="_blank">#<span>DeepLearning</span></a> 🤗</p>
